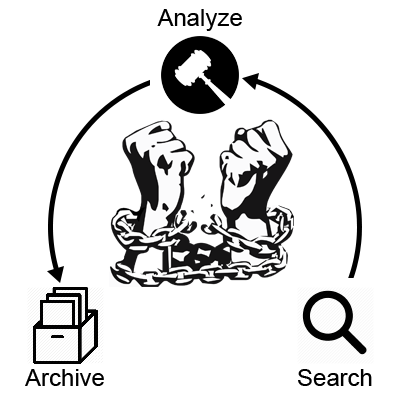

Tomáš Kocman

Cybercrime, Web archiving, Data mining

Security; Databases and data mining

Archiving data from the web is useful whenever we want to keep track of how information about a subject changes over time. This work automates the archiving of web pages, but only of those that satisfy certain rules, meaning that the data they contain match defined regular expressions. The result is a platform that can be configured to crawl and archive web pages according to various strategies. Consider, for example, an institution such as a museum or a library that wants to store the history of certain documents on the web. With the platform, it can automatically visit all pages of a given website and, if those pages satisfy the defined rules, back them up. In the area of cybercrime, investigators may know the website or forum where a perpetrator committed a crime. They can then use the platform to find evidence: the perpetrator's online nickname, the content of messages, and other information valuable to a court.
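The rule check described above can be sketched as follows. This is a minimal illustration, not the platform's actual code: the rule set `RULES`, the helper names, and the choice that all patterns must match are assumptions, and crawling and downloading are left out.

```python
import re

# Hypothetical rule set: a page is archived only if every pattern matches.
RULES = [re.compile(r"museum", re.IGNORECASE), re.compile(r"\b\d{4}\b")]


def should_archive(page_text, rules=RULES):
    """Return True when the page text satisfies all configured patterns."""
    return all(rule.search(page_text) for rule in rules)


def archive_matching(pages, rules=RULES):
    """Return {url: text} for pages that pass the rules.

    `pages` maps a URL to its already downloaded text.
    """
    return {url: text for url, text in pages.items() if should_archive(text, rules)}
```

A different archiving strategy could be expressed by swapping `all` for `any` or by attaching a separate rule list to each site.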

Pavel Hřebíček

Mobile application, Eye Check, Leukocoria, Healthy eyes, iOS, Android, React Native, OpenCV, Dlib, REST

User interfaces; Data processing (image, sound, text, etc.)

The goal of this work is to design and implement a cross-platform, multilingual mobile application for iOS and Android that recognizes leukocoria in a photograph of a human face. Leukocoria is a whitish glow of the pupil that may appear in a photograph taken with a flash. Early detection of this symptom can save a person's sight. The application analyzes a photograph of the user and detects the presence of leukocoria. Its purpose is therefore to analyze a person's eyes, which also gives the application its name: Eye Check. The cross-platform application was built with the React Native framework. The OpenCV and Dlib libraries were used for face detection and image processing. Communication between the client and the server follows the REST architecture. The result is a mobile application that, when it detects leukocoria, advises the user to see a doctor.

Michal Koutenský

network architecture, RINA, rlite

Computer networks

The purpose of this paper is twofold: first, to showcase my contributions to the rlite networking stack, and second, to inform readers about the existence of the Recursive InterNetwork Architecture (RINA) and to serve as a quick, easy-to-read introduction to the architecture and its capabilities. It is well documented that the TCP/IP network architecture suffers from numerous deficiencies and does not meet the demands of modern computing. Many of these issues are structural and cannot be properly solved by adjusting existing protocols or introducing new protocols into the stack. RINA is a clean-slate architecture that aims to be a more general, robust and dynamic basis for building computer networks. I have extended the rlite implementation with support for policies. With this framework in place, components (such as routing) can have multiple behaviours and switch between them at runtime. This additional flexibility and simple extensibility benefits both production deployments and research efforts. As policies are crucial for RINA, supporting them is an important milestone for the implementation and will hopefully foster adoption and accelerate the development of RINA as a viable replacement for the current Internet.

Martin Vondráček

Network Traffic Analysis, Reverse Engineering, Application Crippling, Penetration Testing, Hacking, Social Applications, Unity, Bigscreen, HTC Vive, Oculus Rift

Security; Computer networks

Immersive virtual reality is a technology that is finding more and more areas of application. It is used not only for entertainment but also for work and social interaction, where users' privacy and the confidentiality of information have a high priority. Unfortunately, security measures taken by software vendors are often insufficient. This paper presents the results of an extensive security analysis of the popular VR application Bigscreen, which has more than 500,000 users. We used techniques of network traffic analysis, penetration testing, reverse engineering, and even application crippling. We found critical vulnerabilities that directly expose the privacy of users and allow an attacker to take full control of a victim's computer. The discovered security flaws allowed the distribution of malware and the creation of a botnet using a computer worm. Our team discovered a novel VR cyber attack, Man-in-the-Room. We also found a security vulnerability in the Unity engine. Our responsible disclosure has helped to mitigate the risks for more than half a million Bigscreen users and all affected Unity applications worldwide.

Daniel Uhříček

IoT, Malware, Linux, Security, Dynamic Analysis, Network Analysis, SystemTap

Security

Weak security standards of IoT devices have boosted Linux malware in the past few years. Exposed telnet and SSH services with default passwords, outdated firmware, and system vulnerabilities are all ways of letting attackers build botnets of thousands of compromised embedded devices. This paper emphasizes the importance of the open source community in the field of malware analysis and presents the design and implementation of a multiplatform sandbox for automated malware analysis on the Linux platform. Project LiSa (Linux Sandbox) is a modular system that outputs JSON data, which can be further analyzed either manually or with pattern matching (e.g. with YARA), and serves as a tool to detect and classify Linux malware. LiSa was tested on recent IoT malware samples provided by Avast Software, and it solved various problems of existing implementations.

Drahomír Dlabaja

Light field, Lossy compression, JPEG, Transform coding, Plenoptic representation, Quality assessment

Data processing (image, sound, text, etc.)

This paper proposes a light field image encoding solution based on the four-dimensional discrete cosine transform and quantization. The solution is an extension of JPEG baseline compression. A light field image is interpreted and encoded as a four-dimensional volume to exploit both intra-view and inter-view correlation. Solutions to 4D quantization and block traversal are introduced. The experiments compare the performance of the proposed solution, in terms of PSNR, against the compression of individual image views with JPEG and HEVC intra. The results show that the proposed solution outperforms the reference encoders for light field images with a low average disparity between views, and is therefore suitable for images taken by lenslet-based light field cameras and for synthetically generated images.
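The transform-and-quantize step can be illustrated in one dimension. This is a simplified sketch, not the proposed encoder: it assumes an orthonormal DCT-II and a single uniform quantization step, and the function names are hypothetical. A multi-dimensional DCT is separable, so a 4D transform can be obtained by applying such a 1D transform along each of the four axes in turn.

```python
import math


def dct_1d(x):
    """Orthonormal DCT-II of a sequence of samples."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * s)
    return out


def quantize(coeffs, step):
    """Uniform quantization: divide by the step size and round to integers."""
    return [round(c / step) for c in coeffs]
```

For a constant block all energy ends up in the first (DC) coefficient, which is what makes highly correlated data cheap to encode after quantization.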

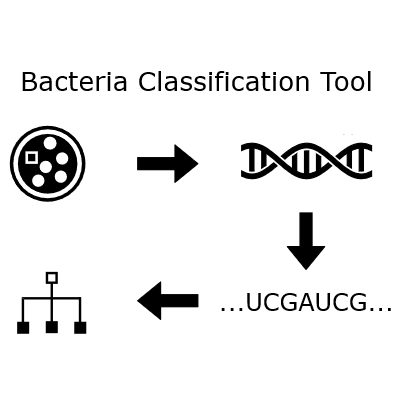

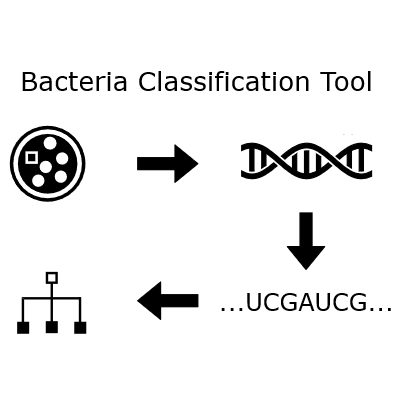

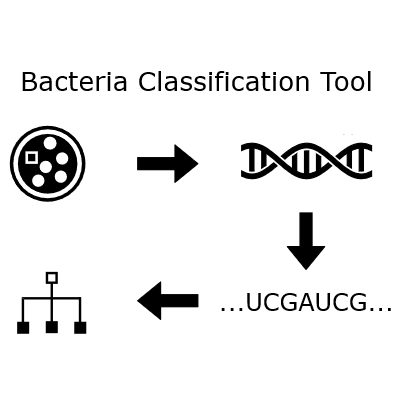

Nikola Valešová

Machine learning, Metagenomics, Bacteria classification, Phylogenetic tree, 16S rRNA, DNA sequencing, scikit-learn

Bioinformatics

This work deals with the problem of automated classification and recognition of bacteria after obtaining their DNA by sequencing. In the scope of this paper, a new classification method based on the 16S rRNA gene segment is designed and described. The presented principle is based on the tree structure of taxonomic categories and uses well-known machine learning algorithms to classify bacteria into one of the connected classes at a given taxonomic level. Part of this work is also dedicated to the implementation of the described algorithm and the evaluation of its prediction accuracy. The performance of various classifier types and their settings is examined, and the setting with the best accuracy is determined. The accuracy of the implemented algorithm is also compared to an existing method based on the BLAST local alignment algorithm available in the QIIME microbiome analysis toolkit.

Patrik Goldschmidt

TCP Reset Cookies, TCP SYN Flood, DDoS mitigation

Computer networks

TCP SYN Flood is one of the most widespread DoS attack types on computer networks today. As a possible countermeasure, this paper proposes a long-forgotten network-based mitigation method, TCP Reset Cookies. The method uses the TCP three-way handshake mechanism to establish a security association with a client before forwarding its SYN data. Since the algorithm requires client validation, all SYN segments from spoofed IP addresses are effectively discarded. From the perspective of a legitimate client, the first connection incurs a delay of up to 1 second, but all subsequent SYN traffic is delayed by only about 30 microseconds. The document provides a detailed description and analysis of this approach, as well as implementation details with enhanced security tweaks. The project was conducted as part of security research by CESNET. The discussed implementation is already integrated into a DDoS protection solution deployed in CESNET's backbone network and at the Czech internet exchange point NIX.CZ.
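The client validation step can be sketched as follows. This is a minimal illustration under assumptions, not the CESNET implementation: it assumes the commonly described variant in which the mitigation device answers an unknown client's SYN with a SYN-ACK carrying a deliberately wrong acknowledgment number (the cookie), and a genuine TCP stack replies with an RST whose sequence number echoes it. The HMAC construction, `SECRET`, the `epoch` counter and the `Mitigator` class are hypothetical.

```python
import hashlib
import hmac
import struct

SECRET = b"demo-secret"  # hypothetical key, for illustration only


def make_cookie(src_ip, src_port, dst_ip, dst_port, epoch, secret=SECRET):
    """Derive a 32-bit cookie from the connection 4-tuple and a coarse time counter."""
    msg = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{epoch}".encode()
    digest = hmac.new(secret, msg, hashlib.sha256).digest()
    return struct.unpack("!I", digest[:4])[0]


class Mitigator:
    """Toy model of the validation logic; no real packets are sent."""

    def __init__(self):
        self.validated = set()  # source addresses that returned a valid cookie

    def on_syn(self, src_ip, src_port, dst_ip, dst_port, epoch):
        if src_ip in self.validated:
            return ("forward", None)  # known client: pass the SYN to the server
        cookie = make_cookie(src_ip, src_port, dst_ip, dst_port, epoch)
        # Reply with a SYN-ACK whose ACK number is the cookie, not ISN + 1.
        return ("syn-ack", cookie)

    def on_rst(self, src_ip, src_port, dst_ip, dst_port, rst_seq, epoch):
        # A genuine stack answers the bogus SYN-ACK with RST, SEQ = received ACK.
        expected = make_cookie(src_ip, src_port, dst_ip, dst_port, epoch)
        if rst_seq == expected:
            self.validated.add(src_ip)
            return True
        return False
```

A spoofed source never sees the SYN-ACK and so cannot return the cookie, while a validated client's retransmitted SYN is forwarded, which is consistent with the one-off delay on the first connection.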

Pavel Kohout

P4, Stateful packet processing, FPGA

Computer architecture and embedded systems; Computer networks

Research and development in network technologies have raised network traffic speeds up to 100 Gbps, while the requirements for security and easy administration remain the same. Collecting network traffic statistics is an important part of defending network infrastructure, but it is difficult to perform in a high-speed network environment. The P4 language is becoming a powerful tool for network administrators thanks to its platform independence and its ability to describe the whole packet processing pipeline. The aim of this work is to extend an existing stateless solution, developed at the CESNET association and targeting the FPGA platform, with support for stateful processing at 100 Gbps. This paper describes the system architecture designed to realize stateful processing in a device described in P4 while respecting requirements on its resources and rate. Performance testing has shown that the device is capable of achieving the target throughput of 100 Gbps for a limited number of stateful memory requests used in the context of a table or a user action.

Tomáš Polášek

Hybrid Ray Tracing, DirectX Ray Tracing, Hardware Accelerated Ray Tracing

Computer graphics

The goal of this paper is to assess the usability of hardware-accelerated ray tracing in near-future rendering engines. Specifically, the DirectX Ray Tracing API and the Nvidia Turing GPU architecture are examined. The assessment is carried out by designing and implementing a hybrid rendering engine with support for hardware-accelerated ray tracing. This engine is then used to implement frequently used graphical effects, such as shadows, reflections and ambient occlusion. The second part of the evaluation considers the difficulty of integration into a regular game engine: the complexity of implementation and the performance of the resulting system. There are two main contributions of this thesis. The first is a hybrid rendering engine called Quark, which uses hardware-accelerated ray tracing to implement the above-mentioned graphical effects. The hybrid rendering approach uses rasterization to perform the bulk of the computationally intensive operations, while ray tracing adds further information to the synthesized image. The second contribution is a set of performance measurements of the final system, which include the time spent on ray tracing operations and the number of rays cast for different input models. The presented system shows one possible way of using the Nvidia Turing ray tracing cores to generate more realistic images. Preliminary measurements of the rendering system show the great potential of this new technology, with results of 5 to 12 GigaRays per second on an RTX 2080 Ti. The largest problem so far is the integration of this technology into rasterization-based engines: data needs to be prepared for ray tracing and manually accessed from ray tracing shaders. The second problem is the build time of acceleration structures, which is on the order of milliseconds even for smaller models with around 50 thousand triangles.

Ondřej Valeš

finite automata, tree automata, language equivalence, language inclusion, bisimulation, antichains, bisimulation up-to congruence

Testing, analysis and verification

Tree automata and their languages are used in formal verification and theorem proving, but for many practical applications the performance of existing algorithms for tree automata manipulation is unsatisfactory. In this work, a novel algorithm for testing language equivalence and inclusion on tree automata is proposed and implemented as a module of the VATA library, with the goal of creating an algorithm that is faster than existing methods on at least a portion of real-world examples. First, existing approaches to equivalence and inclusion testing on both word and tree automata are examined. These approaches are then modified to create the bisimulation up-to congruence algorithm for tree automata. The efficiency of the new approach is compared with existing methods for testing language equivalence and inclusion on tree automata.
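As background for the bisimulation idea, the simplest case can be sketched for deterministic word automata. This is not the proposed algorithm: the up-to congruence technique and its extension to tree automata are considerably more involved, and the tuple representation of a DFA used here is an assumption made for illustration.

```python
from collections import deque


def equivalent(dfa_a, dfa_b, alphabet):
    """Check language equivalence of two complete DFAs by exploring state pairs.

    Each DFA is a tuple (initial, accepting_set, delta), where
    delta[(state, symbol)] gives the successor state.
    """
    init_a, acc_a, delta_a = dfa_a
    init_b, acc_b, delta_b = dfa_b
    seen = set()
    todo = deque([(init_a, init_b)])
    while todo:
        p, q = todo.popleft()
        if (p, q) in seen:
            continue
        if (p in acc_a) != (q in acc_b):
            return False  # some word is accepted by exactly one automaton
        seen.add((p, q))
        for sym in alphabet:
            todo.append((delta_a[(p, sym)], delta_b[(q, sym)]))
    return True  # the explored pairs form a bisimulation
```

Up-to techniques aim to shrink the `seen` relation that has to be explored, which is where the potential speed-up comes from.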

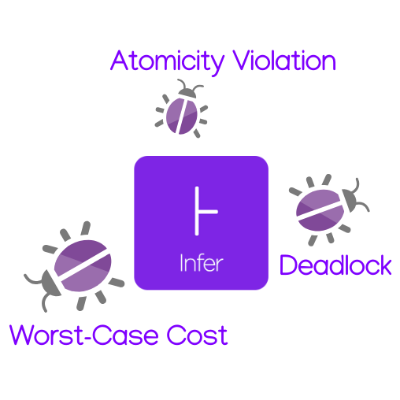

Dominik Harmim, Vladimír Marcin, Ondřej Pavela

Facebook Infer, Static Analysis, Abstract Interpretation, Atomicity Violation, Concurrent Programs, Performance, Worst-Case Cost, Deadlock

Testing, analysis and verification

Static analysis has become one of the most popular ways of catching bugs early in modern software. However, reasonably precise static analyses still often have problems scaling to larger codebases, and efficient static analysers, such as Coverity or CodeSonar, are often proprietary and difficult to openly evaluate or extend. Facebook Infer offers a static analysis framework that is open source, extensible, and promotes efficient modular and incremental analysis. In this work, we propose three inter-procedural analysers extending the capabilities of Facebook Infer: Looper (a resource bounds analyser), L2D2 (a low-level deadlock detector), and Atomer (an atomicity violation analyser). We evaluated our analysers on both smaller hand-crafted examples and publicly available benchmarks derived from real-life low-level programs, and obtained encouraging results. In particular, L2D2 attained a 100% detection rate and an 11% false positive rate on an extensive benchmark of hundreds of functions and millions of lines of code.

Josef Jon

neural machine translation, context, transformer, document level translation

Data processing (image, sound, text, etc.)

This work explores means of utilizing extra-sentential context in neural machine translation (NMT). Traditionally, NMT systems translate one source sentence to one target sentence without any notion of the surrounding text. This is clearly insufficient and different from how humans translate text. For many high-resource language pairs, the output of NMT systems is nowadays indistinguishable from human translation under certain (strict) conditions. One of the conditions is that evaluators see the sentences separately. When whole documents are evaluated, even the best NMT systems still fall short of human translations. This motivates research into employing document context in NMT, since there might not be much room left to improve translations at the sentence level, at least for high-resource languages and domains. This work summarizes recent state-of-the-art approaches, implements them, evaluates them both in terms of general translation quality and on specific context-related phenomena, and analyzes their shortcomings. Additionally, a test set of context phenomena for English-to-Czech translation was created to enable further comparison and analysis.

Son Hai Nguyen

Augmented Reality, Computer Vision, Image Processing

Data processing (image, sound, text, etc.)

Augmented reality visualizes additional information in a real-world environment. The main goal is to make 2D graphics inserted into a scene captured by a stationary camera look natural, with the possibility of real-time processing. Although several methods have tackled the foreground segmentation problem, many of them are not robust enough on diverse datasets. A modified version of the background subtraction algorithm ViBe yields the best visual results, but because the mask is binary, the edges of the segmented objects are coarse. To smooth the edges, Global Sampling Matting is performed; this refinement greatly increases the perceptual quality of the segmentation. Because ViBe does not classify shadows, artifacts occurred after segmented objects were inserted on top of the graphics. This was solved by the proposed shadow segmentation, which compares the differences in brightness and gradients between the background model and the current frame. To remove the plastic look of the inserted graphics, a texture propagation method is proposed that considers the local and mean brightness of the background. The segmentation and image matting algorithms are tested on various datasets. The resulting pipeline is demonstrated on a dataset of videos.

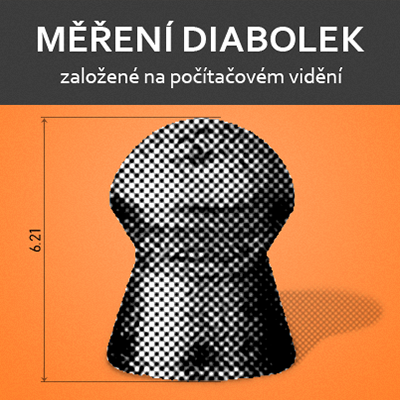

Martin Kruták

Object gauging, Sub-pixel edge detection, Industrial image processing

Data processing (image, sound, text, etc.)

In this paper, a method for dimensional gauging of pellets based on image processing with sub-pixel precision is introduced. The method is intended as a component of a quality control system for pellets. Demand for image-based object gauging is rising in modern manufacturing. Very often, depending on the required precision, gauging is done physically, in direct contact with the object. For soft objects that could be damaged by standard contact gauges, a non-contact method is needed. The method proposed in this paper combines sub-pixel edge detection in the image with interpolation-based edge methods. The resulting algorithm is fast and sufficiently accurate for industrial use. Experimental results described in the paper show a gauging accuracy of 25 micrometers for the side view and 10 micrometers for the frontal view.
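One common interpolation-based way to reach sub-pixel precision is to fit a parabola through the gradient peak and its two neighbours. The sketch below illustrates that idea on a 1D intensity profile; it is an assumption made for illustration, not necessarily the interpolation used in the paper, and the function name is hypothetical.

```python
def subpixel_edge(profile):
    """Locate the strongest edge in a 1D intensity profile with sub-pixel precision."""
    # Central-difference gradient magnitude at the interior samples 1..len-2.
    grad = [abs(profile[i + 1] - profile[i - 1]) / 2.0
            for i in range(1, len(profile) - 1)]
    k = max(range(len(grad)), key=grad.__getitem__)
    if k == 0 or k == len(grad) - 1:
        return float(k + 1)  # peak at the border: no neighbours to interpolate
    g_l, g_c, g_r = grad[k - 1], grad[k], grad[k + 1]
    denom = g_l - 2.0 * g_c + g_r
    # Vertex of the parabola through the three gradient samples.
    offset = 0.0 if denom == 0 else 0.5 * (g_l - g_r) / denom
    return (k + 1) + offset
```

The distance between two such edge positions, multiplied by the calibrated size of one pixel, would give a dimension estimate finer than the pixel grid.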

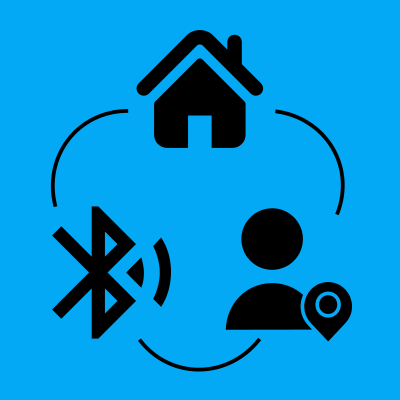

David Průdek

Person detection, IoT, Bluetooth, PIR, Home-Assistant, Home automation, ESP32, Embedded system

Computer architecture and embedded systems

The goal of this work is to design and implement a sensor that detects the presence of people in a room and is suitable for home automation. I focused on finding a solution that relies on common wearable electronics. A sensor placed in the room detects these wearable devices and determines their position based on signal strength. For this use case I chose Bluetooth LE, which is part of most wearable electronics and has recently been used often for indoor navigation. The sensor is integrated into automation through the Home-Assistant system. The main contribution of this work is a cheap and simple way to extend ordinary home automation with detection of people in individual rooms, rather than only within a broad area as offered by GPS-based location or by connection to a Wi-Fi access point.
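How signal strength can be turned into a rough position is sketched below. This is an illustration under assumptions, not the sensor's firmware: it uses the standard log-distance path loss model, and the reference RSSI at 1 m, the path loss exponent, and both function names are hypothetical values chosen for the example.

```python
def estimate_distance(rssi_dbm, rssi_at_1m=-59.0, path_loss_exponent=2.0):
    """Estimate distance in metres from RSSI using the log-distance model."""
    return 10 ** ((rssi_at_1m - rssi_dbm) / (10.0 * path_loss_exponent))


def nearest_room(readings):
    """Pick the room whose sensor reports the strongest signal.

    `readings` maps a room name to the RSSI (in dBm) measured by its sensor.
    """
    return max(readings, key=readings.get)
```

In practice RSSI is noisy, so readings would typically be smoothed over time before a room is reported to the automation system.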

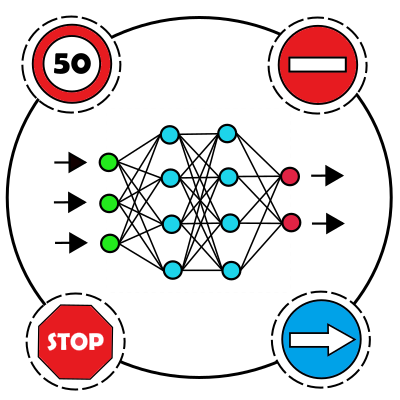

Filip Kočica

Convolutional neural network, YOLO, Detection, Synthetic, Traffic sign

Data processing (image, sound, text, etc.)

This work addresses the detection of traffic signs using modern image processing techniques. The solution uses a special deep convolutional neural network architecture called YOLO (You Only Look Once), which performs detection and classification of objects in a single step and thus considerably speeds up the whole process. The work also compares the accuracy of models trained on real and synthetic datasets. The model trained on real data achieved 63.4% mAP and the model trained on synthetic data achieved 82.3% mAP. Evaluating one frame takes about 40.4 ms on an average graphics chip and about 3.9 ms on an above-average one. The contribution of this work is the finding that a neural network model trained on synthetic data can, under certain conditions, achieve similar or better results than a model trained on real data. This can simplify the creation of a detector by removing the need to annotate a large number of images.

Miroslav Karásek

Augmented reality, Measurement, Android, ARCore, Point Cloud

Computer graphics; User interfaces

This article describes a technology for automatically measuring the overall dimensions of a generic object using a commercially available mobile phone. The user only has to walk around the measured object and scan it with the phone's camera. The measurement uses computer vision algorithms for scene reconstruction to obtain the object's point cloud. This paper proposes algorithms for processing the point cloud and for estimating the dimensions of the object. It focuses on collecting and filtering points, where the biggest challenge is to recognize the points belonging to the measured object and separate them from the rest. The proposed algorithms were tested in an Android application implemented with ARCore. Measurements of a cuboid object had an error of about 1 cm. Objects with other shapes had a measurement error of 3 to 5 cm. The measurement error was independent of object size, which implies that the algorithm is relatively more accurate for larger objects whose shape approaches a cuboid.
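The filter-then-measure idea can be sketched as follows. This is a deliberately simple stand-in, not the algorithms proposed in the article: it assumes an axis-aligned object, discards points far from the per-axis median, and the `max_dist` threshold and function name are hypothetical.

```python
from statistics import median


def estimate_dimensions(points, max_dist=1.0):
    """Estimate axis-aligned extents of an object from a noisy 3D point cloud.

    `points` is a list of (x, y, z) tuples in metres.
    """
    centre = [median(p[i] for p in points) for i in range(3)]
    # Keep only points within `max_dist` of the median centre (outlier filter).
    kept = [
        p for p in points
        if sum((p[i] - centre[i]) ** 2 for i in range(3)) ** 0.5 <= max_dist
    ]
    return tuple(
        max(p[i] for p in kept) - min(p[i] for p in kept) for i in range(3)
    )
```

A practical version would also have to separate the object from the floor and estimate its orientation before taking extents.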

Tomáš Chocholatý

Traffic sign detection, Convolutional neural networks, Object detection in images

Data processing (image, sound, text, etc.)

This article deals with the detection of traffic signs in images. The goal is to create a detector suitable for detecting and recognizing traffic signs in real traffic. Detection is addressed using convolutional neural networks (CNNs). To train the networks, suitable datasets were created, consisting of both synthetic and real data. A program for quantitative evaluation was created to assess detection quality.

Šimon Stupinský

Performance, Continuous integration, Non-parametric analysis, Regressogram, Moving average, Kernel regression, Automated changes detection, Difference analysis

Testing, analysis and verification

Current tools that manage project performance do not provide a satisfying evaluation of the overall performance history, which is often crucial when developing large applications. In our previous work, we introduced a tool-chain that collected a set of performance data, extrapolated these data into a performance model represented as a function of two dependent variables, and compared the result with the model of the previous version, reporting possible performance changes. The solution, however, depended on precisely specifying and measuring those dependent variables. In this work, we propose a more flexible approach to computing performance models from collected data, followed by a check for performance changes that requires only one measured kind of variable. We evaluated our solution on different versions of vim, and we were able to detect a known issue in one of the versions as well as verify that there were no significant performance changes between two stable versions.
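The keywords name the regressogram as one of the non-parametric models. A minimal sketch of a regressogram and a naive comparison of two versions is shown below; the function names, the relative `tolerance` threshold, and the comparison rule are assumptions made for illustration and do not reproduce the tool-chain's actual detection method.

```python
def regressogram(xs, ys, bins):
    """Piecewise-constant model: mean of y within equal-width bins of x."""
    lo, hi = min(xs), max(xs)
    width = (hi - lo) / bins or 1.0
    sums = [0.0] * bins
    counts = [0] * bins
    for x, y in zip(xs, ys):
        idx = min(int((x - lo) / width), bins - 1)
        sums[idx] += y
        counts[idx] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]


def detect_changes(baseline, target, tolerance=0.10):
    """Return indices of bins whose mean changed by more than `tolerance` (relative).

    Both models are assumed to be computed over the same x range and bin count.
    """
    changed = []
    for i, (b, t) in enumerate(zip(baseline, target)):
        if b is None or t is None:
            continue  # skip bins without data in either version
        if abs(t - b) > tolerance * abs(b):
            changed.append(i)
    return changed
```

Comparing bin by bin localizes a change to a range of the independent variable instead of reporting a single overall verdict.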

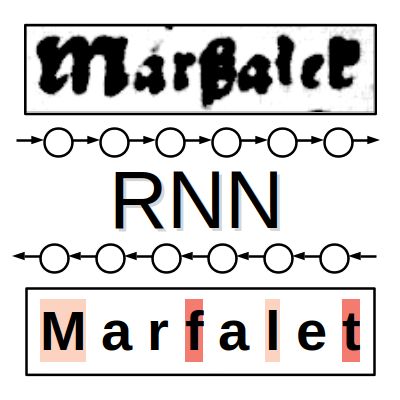

Jan Kohút

text recognition, recurrent neural networks, convolutional neural networks, adaptation, active learning, IMPACT dataset

Robotics and artificial intelligence; Data processing (image, sound, text, etc.)

The goal of this work is to compare neural network architectures for text recognition and to adapt the networks to texts other than those they were trained on. For these experiments I use the large and diverse IMPACT dataset of more than one million lines. I use neural networks to check the suitability of lines, that is, the legibility and correctness of the line crops. In total I compare 6 purely convolutional networks and 9 recurrent networks. Adaptation is performed on Polish historical texts, with the training data of the adapted networks containing no texts in Slavic languages. The adaptations use active learning approaches to select new adaptation data. Purely convolutional networks achieve an accuracy of 98.6%, recurrent networks 99.5%. Accuracy before adaptation is around 79%; after gradual adaptation on 2,500 lines it rises to 97%. Active learning approaches achieve better accuracy than random selection. When processing datasets, it is advisable to use already trained neural networks to remove as much erroneous data as possible. Recurrent layers noticeably increase accuracy. Active learning approaches are beneficial during adaptation.

David Hél

Tax, Form, Web application

User interfaces

The goal of this project is to develop interactive tax return forms that are easy to fill in for everyone, even without any experience in economics or tax law. The main purpose is to create simple forms for different types of people (e.g., businesspeople, employees, or students). The official application for tax returns (EPO) provided by the Ministry of Finance of the Czech Republic is complex. It offers many options that are usually unimportant for common users. Hence, it is often difficult to achieve the right result. This paper presents a new web application called “Přiznání”, which solves the problem by asking users a set of simple yes/no questions. The questions are based on the experience of revenue officers and taxpayers who need to fill in the forms every year. After answering all these questions, the user can download a correctly filled form that can be sent to the revenue authority. At the current stage, the application provides the form for businesspeople. It successfully generates the Personal Income Tax form, the official document containing the information about personal income tax. Forms for other types of people are being developed.

Nikola Valešová

Machine learning, Metagenomics, Bacteria classification, Phylogenetic tree, 16S rRNA, DNA sequencing, scikit-learn

Bioinformatika

This work deals with the problem of automated classification and recognition of bacteria after obtaining their DNA by the sequencing process. In the scope of this paper, a~new classification method based on the 16S rRNA gene segment is designed and described. The presented principle is based on the tree structure of taxonomic categories and uses well-known machine learning algorithms to classify bacteria into one of the connected classes at a~given taxonomic level. A~part of this work is also dedicated to implementation of the described algorithm and evaluation of its prediction accuracy. The performance of various classifier types and their settings is examined and the setting with the best accuracy is determined. Accuracy of the implemented algorithm is also compared to an existing method based on BLAST local alignment algorithm available in the QIIME microbiome analysis toolkit.

Ondřej Valeš

finite automata, tree automata, language equivalence, language inclusion, bisimulation, antichains, bisimulation up-to congruence

Testování, analýza a verifikace

Tree automata and their languages find use in the field of formal verification and theorem proving but for many practical applications performance of existing algorithms for tree automata manipulation is unsatisfactory. In this work a novel algorithm for testing language equivalence and inclusion on tree automata is proposed and implemented as a module of the VATA library with a goal of creating algorithm that is comparatively faster than existing methods on at least a portion of real-world examples. First, existing approaches to equivalence and inclusion testing on both word and tree automata are examined. These existing approaches are then modified to create the bisimulation up-to congruence algorithm for tree automata. Efficiency of this new approach is compared with existing tree automata language equivalence and inclusion testing methods.

Martin Vondráček

Network Traffic Analysis, Reverse Engineering, Application Crippling, Penetration Testing, Hacking, Social Applications, Unity, Bigscreen, HTC Vive, Oculus Rift

Bezpečnost Počítačové sítě

Immersive virtual reality is a technology that finds more and more areas of application. It is used not only for entertainment but also for work and social interaction where user's privacy and confidentiality of the information has a high priority. Unfortunately, security measures taken by software vendors are often not sufficient. This paper shows results of extensive security analysis of a popular VR application Bigscreen which has more than 500,000 users. We have utilised techniques of network traffic analysis, penetration testing, reverse engineering, and even application crippling. We have found critical vulnerabilities directly exposing the privacy of the users and allowing the attacker to take full control of a victim's computer. Found security flaws allowed distribution of malware and creation of a botnet using a computer worm. Our team has discovered a novel VR cyber attack Man-in-the-Room. We have also found a security vulnerability in the Unity engine. Our responsible disclosure has helped to mitigate the risks for more than half a million Bigscreen users and all affected Unity applications worldwide.

Son Hai Nguyen

Augmented Reality, Computer Vision, Image Processing

Zpracování dat (obraz, zvuk, text apod.)

Augmented reality visualizes additional information in real-world environment. Main goal is achieving natural looking of the inserted 2D graphics in a scene captured by a stationary camera with possibility of real time processing. Although several methods tackled foreground segmentation problem, many of them are not robust enough on diverse datasets. Modified background subtraction algorithm ViBe yields best visual results, but because of the nature of binary mask, edges of the segmented objects are coarse. In order to smooth edges, Global Sampling Matting is performed, this refinement greatly increased the perceptual quality of segmentation. Considering that the shadows are not classified by ViBe, artifacts were occurring after insertion of segmented objects on top of the graphics. This was solved by the proposed shadow segmentation, which was achieved by comparing the differences between brightness and gradients of the background model and the current frame. To remove plastic look of the inserted graphics, texture propagation has been proposed, that considers the local and mean brightness of the background. Segmentation algorithms and image matting algorithms are tested on various datasets. Resulted pipeline is demonstrated on a dataset of videos.

Martin Vondráček

Network Traffic Analysis, Reverse Engineering, Application Crippling, Penetration Testing, Hacking, Social Applications, Unity, Bigscreen, HTC Vive, Oculus Rift

Bezpečnost Počítačové sítě

Immersive virtual reality is a technology that finds more and more areas of application. It is used not only for entertainment but also for work and social interaction where user's privacy and confidentiality of the information has a high priority. Unfortunately, security measures taken by software vendors are often not sufficient. This paper shows results of extensive security analysis of a popular VR application Bigscreen which has more than 500,000 users. We have utilised techniques of network traffic analysis, penetration testing, reverse engineering, and even application crippling. We have found critical vulnerabilities directly exposing the privacy of the users and allowing the attacker to take full control of a victim's computer. Found security flaws allowed distribution of malware and creation of a botnet using a computer worm. Our team has discovered a novel VR cyber attack Man-in-the-Room. We have also found a security vulnerability in the Unity engine. Our responsible disclosure has helped to mitigate the risks for more than half a million Bigscreen users and all affected Unity applications worldwide.

Daniel Uhříček

IoT, Malware, Linux, Security, Dynamic Analysis, Network Analysis, SystemTap

Security

Weak security standards of IoT devices have fueled the spread of Linux malware in the past few years. Exposed telnet and SSH services with default passwords, outdated firmware, and system vulnerabilities are all ways in which attackers can build botnets of thousands of compromised embedded devices. This paper emphasizes the importance of the open-source community in the field of malware analysis and presents the design and implementation of a multiplatform sandbox for automated malware analysis on the Linux platform. Project LiSa (Linux Sandbox) is a modular system that outputs JSON data, which can be further analyzed either manually or with pattern matching (e.g. with YARA), and serves as a tool to detect and classify Linux malware. LiSa was tested on recent IoT malware samples provided by Avast Software and solved various problems of existing implementations.

Dominik Harmim, Vladimír Marcin, Ondřej Pavela

Facebook Infer, Static Analysis, Abstract Interpretation, Atomicity Violation, Concurrent Programs, Performance, Worst-Case Cost, Deadlock

Testing, Analysis and Verification

Static analysis has become one of the most popular ways of catching bugs early in modern software. However, reasonably precise static analyses still often have problems scaling to larger codebases, and efficient static analysers, such as Coverity or CodeSonar, are often proprietary and difficult to openly evaluate or extend. Facebook Infer offers a static analysis framework that is open source, extensible, and promotes efficient modular and incremental analysis. In this work, we propose three inter-procedural analysers extending the capabilities of Facebook Infer: Looper (a resource bounds analyser), L2D2 (a low-level deadlock detector), and Atomer (an atomicity violation analyser). We evaluated our analysers on smaller hand-crafted examples as well as on publicly available benchmarks derived from real-life low-level programs and obtained encouraging results. In particular, L2D2 attained a 100 % detection rate and an 11 % false positive rate on an extensive benchmark of hundreds of functions and millions of lines of code.
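To give a rough idea of what a deadlock detector looks for, the sketch below uses the textbook lock-order graph technique: an edge `a -> b` is recorded whenever lock `b` is acquired while `a` is held, and a cycle in the graph signals a potential deadlock. This is only an illustration under stated assumptions, not the algorithm used by L2D2 or Facebook Infer; the trace format and the names `build_lock_order_graph` and `find_cycle` are hypothetical.

```python
from collections import defaultdict


def build_lock_order_graph(traces):
    """Add an edge a -> b whenever lock b is acquired while lock a is held.

    Each trace is a list of (op, lock) pairs, op being "lock" or "unlock".
    This trace format is an assumption made for the example.
    """
    graph = defaultdict(set)
    for trace in traces:
        held = []
        for op, lock in trace:
            if op == "lock":
                for h in held:
                    graph[h].add(lock)
                held.append(lock)
            elif op == "unlock":
                held.remove(lock)
    return graph


def find_cycle(graph):
    """Return one cycle as a list of locks, or None if the graph is acyclic."""
    WHITE, GREY, BLACK = 0, 1, 2
    color = defaultdict(int)  # every node starts WHITE (unvisited)
    path = []

    def visit(node):
        color[node] = GREY
        path.append(node)
        for nxt in sorted(graph.get(node, ())):
            if color[nxt] == GREY:
                # nxt is on the current DFS path, so the path closes a cycle
                return path[path.index(nxt):] + [nxt]
            if color[nxt] == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in sorted(graph):
        if color[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


if __name__ == "__main__":
    # Two code paths taking the same pair of locks in opposite order.
    traces = [
        [("lock", "A"), ("lock", "B"), ("unlock", "B"), ("unlock", "A")],
        [("lock", "B"), ("lock", "A"), ("unlock", "A"), ("unlock", "B")],
    ]
    print(find_cycle(build_lock_order_graph(traces)))
```

A real static analyser has to derive such acquisition orders from code rather than from recorded traces, and that is where false positives such as the 11 % reported above can arise.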

Son Hai Nguyen

Augmented Reality, Computer Vision, Image Processing

Data Processing (image, sound, text, etc.)

Augmented reality overlays additional information on a real-world environment. The main goal of this work is to make 2D graphics inserted into a scene captured by a stationary camera look natural, while keeping real-time processing possible. Although several methods address the foreground segmentation problem, many of them are not robust enough across diverse datasets. A modified version of the background subtraction algorithm ViBe yields the best visual results, but because its output is a binary mask, the edges of the segmented objects are coarse. To smooth the edges, Global Sampling Matting is applied; this refinement greatly increases the perceptual quality of the segmentation. Because ViBe does not classify shadows, artifacts appeared when segmented objects were placed on top of the graphics. This was solved by the proposed shadow segmentation, which compares the differences in brightness and gradients between the background model and the current frame. To remove the plastic look of the inserted graphics, a texture propagation method is proposed that takes the local and mean brightness of the background into account. The segmentation and image matting algorithms are tested on various datasets, and the resulting pipeline is demonstrated on a dataset of videos.
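The brightness-and-gradient comparison mentioned in the abstract can be illustrated with a minimal sketch. It assumes grayscale images stored as nested lists and a common heuristic: a shadow darkens a pixel by a bounded factor while leaving the underlying texture, and hence the scaled gradient, roughly unchanged. The thresholds `lo`, `hi`, and `grad_tol` and the function names are assumptions made for this example; the abstract does not specify the actual decision rule.

```python
def gradient(img, y, x):
    """Forward-difference gradient (gx, gy), clamped at the image border."""
    h, w = len(img), len(img[0])
    gx = img[y][min(x + 1, w - 1)] - img[y][x]
    gy = img[min(y + 1, h - 1)][x] - img[y][x]
    return gx, gy


def shadow_mask(background, frame, lo=0.4, hi=0.9, grad_tol=10.0):
    """Mark pixels that look like background darkened by a shadow.

    A pixel is flagged when (1) its brightness ratio frame/background lies
    in [lo, hi] and (2) the frame gradient matches the background gradient
    scaled by that ratio, i.e. the texture is preserved.
    All thresholds are illustrative assumptions.
    """
    h, w = len(background), len(background[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            b, f = background[y][x], frame[y][x]
            if b <= 0:
                continue  # ratio undefined for black background pixels
            ratio = f / b
            bgx, bgy = gradient(background, y, x)
            fgx, fgy = gradient(frame, y, x)
            diff = abs(fgx - ratio * bgx) + abs(fgy - ratio * bgy)
            mask[y][x] = lo <= ratio <= hi and diff <= grad_tol
    return mask
```

Because the gradient test looks at neighbouring pixels, this simple version tends to miss pixels right next to a shadow boundary or an object edge, so a practical pipeline would likely post-process the mask.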

Nikola Valešová

Machine learning, Metagenomics, Bacteria classification, Phylogenetic tree, 16S rRNA, DNA sequencing, scikit-learn

Bioinformatics

This work deals with the problem of automated classification and recognition of bacteria after obtaining their DNA by the sequencing process. In the scope of this paper, a new classification method based on the 16S rRNA gene segment is designed and described. The presented principle is based on the tree structure of taxonomic categories and uses well-known machine learning algorithms to classify bacteria into one of the connected classes at a given taxonomic level. A part of this work is also dedicated to the implementation of the described algorithm and the evaluation of its prediction accuracy. The performance of various classifier types and their settings is examined, and the setting with the best accuracy is determined. The accuracy of the implemented algorithm is also compared to an existing method based on the BLAST local alignment algorithm available in the QIIME microbiome analysis toolkit.
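The idea of classifying along the taxonomy tree, one level at a time, can be sketched as follows. This is a simplified illustration, not the paper's implementation: it swaps in a nearest-centroid rule over k-mer frequencies in place of the scikit-learn classifiers the paper evaluates, and the class `TaxonomyClassifier`, the taxon labels, and the toy sequences are all made up for the example.

```python
from collections import Counter, defaultdict


def kmer_profile(seq, k=3):
    """Relative frequencies of all overlapping k-mers in a sequence."""
    counts = Counter(seq[i:i + k] for i in range(len(seq) - k + 1))
    total = sum(counts.values())
    return {kmer: c / total for kmer, c in counts.items()}


def distance(p, q):
    """Manhattan distance between two sparse k-mer profiles."""
    return sum(abs(p.get(key, 0.0) - q.get(key, 0.0)) for key in set(p) | set(q))


class TaxonomyClassifier:
    """One nearest-centroid classifier per internal node of the taxonomy tree."""

    def fit(self, sequences, lineages, k=3):
        self.k = k
        # members[prefix][child] collects the profiles of sequences under `child`
        members = defaultdict(lambda: defaultdict(list))
        for seq, lineage in zip(sequences, lineages):
            profile = kmer_profile(seq, k)
            for depth, taxon in enumerate(lineage):
                members[tuple(lineage[:depth])][taxon].append(profile)
        self.centroids = {
            prefix: {taxon: self._mean(ps) for taxon, ps in children.items()}
            for prefix, children in members.items()
        }
        return self

    @staticmethod
    def _mean(profiles):
        total = Counter()
        for p in profiles:
            total.update(p)  # adds the frequencies key by key
        return {kmer: v / len(profiles) for kmer, v in total.items()}

    def predict(self, seq):
        """Descend from the root, choosing the closest child at each level."""
        profile = kmer_profile(seq, self.k)
        lineage = ()
        while lineage in self.centroids:
            children = self.centroids[lineage]
            best = min(children, key=lambda t: distance(profile, children[t]))
            lineage += (best,)
        return list(lineage)


if __name__ == "__main__":
    # Toy sequences with placeholder taxon names, not real 16S rRNA data.
    seqs = ["ACACACACAC", "ACACACAAAA", "GTGTGTGTGT", "GTGTGGGGGG"]
    lineages = [
        ["phylum_A", "genus_A1"],
        ["phylum_A", "genus_A2"],
        ["phylum_B", "genus_B1"],
        ["phylum_B", "genus_B2"],
    ]
    model = TaxonomyClassifier().fit(seqs, lineages)
    print(model.predict("GTGTGTGGGG"))
```

Each decision only has to separate the children of the current node, which keeps the number of candidate classes small at every step; the price is that a mistake at a higher taxonomic level cannot be corrected further down.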